AI Security (AISec) - A Threat Capability Matrix

Quantifying AI Threat Capability

In the rapidly evolving landscape of artificial intelligence (AI), distinguishing between hypothetical threats and tangible AI Security (AISec) threats has never been more critical. As AI technologies grow more sophisticated, so too do the potential security threats they pose, from deepfakes that disrupt public understanding and shape beliefs to AI-powered cyber-attacks targeting critical infrastructure. However, not all threats carry the same immediacy or potential for harm. Amid a sea of speculation and sensationalism, it is paramount to separate fearmongering from genuine threats. This distinction is not merely academic. It is a crucial step in allocating our collective resources wisely: focusing our defence efforts, building the right defensive capabilities, and spending money judiciously as we prepare for the future of cybersecurity in an AI-driven world. To do this, we must adopt a more scientific approach to understanding cybersecurity risks.

Additionally, it is vital to acknowledge that threat actors often demonstrate considerable sophistication in their operations, methodically linking a sequence of capabilities into a series of stages known as the "kill chain." To understand the threat and build the right AI security defensive capabilities, we must track both the level of capability development and the stages of the kill chain.

In this article, we introduce the AI Security Threat Capability Matrix as a tool for navigating the complex landscape of AI security threats. By organising and plotting the AI security threat capabilities of malicious threat actors against each stage of the kill chain, we can more precisely identify and differentiate AI threat vectors, from theoretical ideas to those actively being exploited.

This approach provides a structured method for understanding and mitigating AI security risks, improving our capacity to address both current and future challenges in a timely and effective manner. Such mapping is not only practical but essential, guiding cybersecurity professionals, strategic leaders, policymakers, and the broader tech community as they prioritise and respond to AI security threats. Before we begin, however, it is worth explaining the term AI Security (AISec).

What is AI Security (AISec)?

AI Security can be described as the range of tools, strategies, and processes used to identify and prevent security attacks that could jeopardise the Confidentiality, Integrity, or Availability (CIA) of an AI model or AI-enabled system. It is a crucial element of the AI design and development cycle, ensuring safe and consistent performance throughout the system's operation (see AI Secure-by-Design). In addition to traditional cybersecurity vulnerabilities, integrating AI into systems introduces new threat vectors and vulnerabilities, necessitating a fresh set of security protocols. Identifying and mitigating these vulnerabilities within AI-enabled systems is fundamental to AI security and demands both technical and operational responses. A threat-centric approach using the Capability Matrix helps leaders make the critical decisions needed to triage these threats and prioritise resources to mitigate them.

Why do we need to understand Threat Capability Readiness Levels?

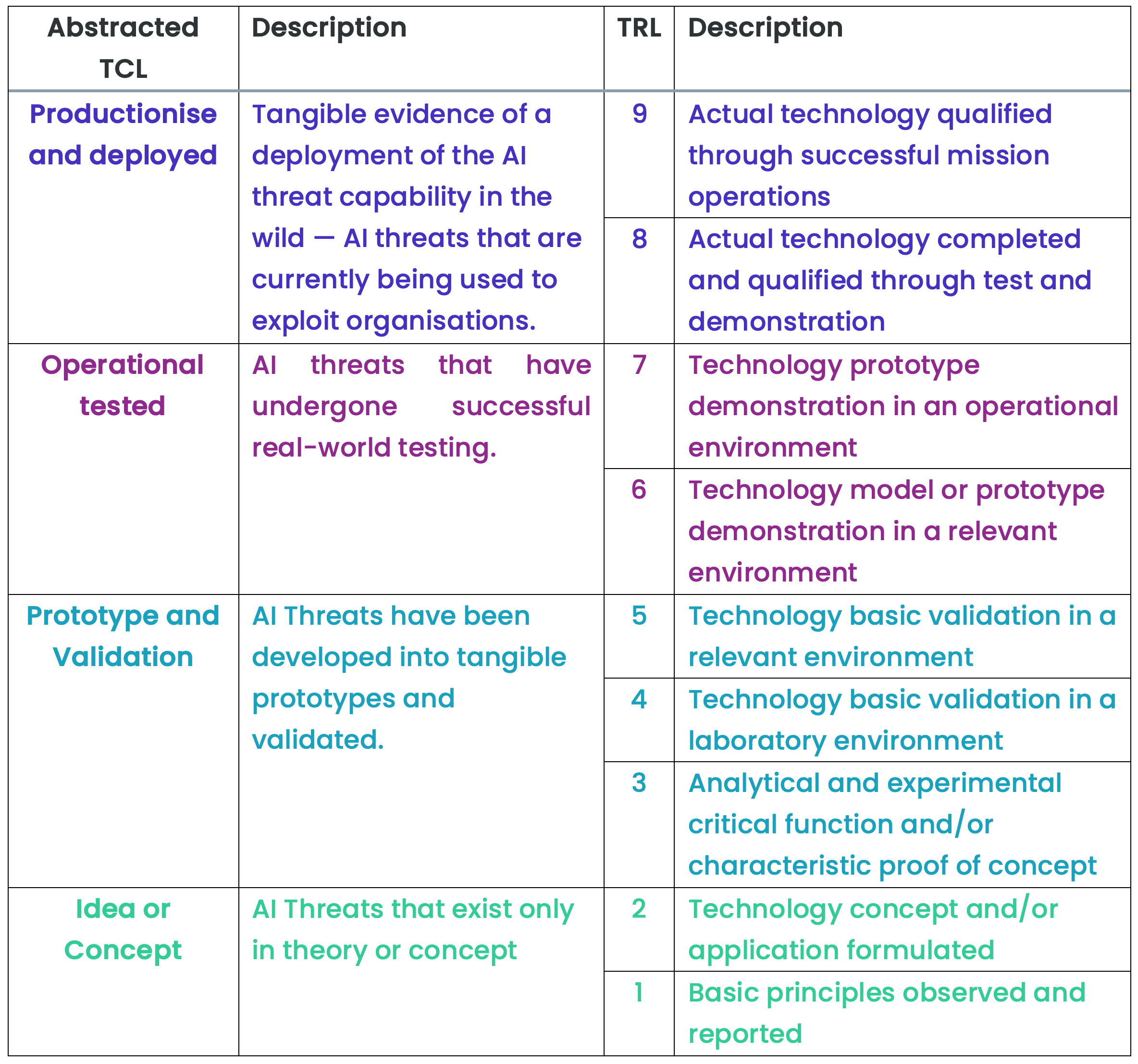

By adopting a threat-centric, evidence-based methodology, we can examine each AI threat vector based on its current capability, separating what is theoretically possible from what is empirically evident. This approach distinguishes between four stages of AI threat capability development:

1) Idea or Concept – AI threats that exist only in theory,

2) Prototype and Validation – AI threats that have been developed into tangible prototypes and validated,

3) Operationally Tested – AI threats that have undergone successful real-world testing,

4) Productised and Deployed – AI threats with tangible evidence of deployment in the wild: threats that are currently being used to exploit organisations.
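To make the four levels concrete, here is a minimal Python sketch that encodes them as an ordered enumeration and assigns a level from recorded evidence. The names `ThreatCapabilityLevel`, `Evidence` and `classify` are hypothetical, and the rule of taking the highest level the evidence supports is our own assumption rather than part of the methodology above.

```python
from dataclasses import dataclass
from enum import IntEnum


class ThreatCapabilityLevel(IntEnum):
    """The four AI Threat Capability Levels (TCL), ordered by maturity."""
    IDEA_OR_CONCEPT = 1           # exists only in theory
    PROTOTYPE_AND_VALIDATION = 2  # developed into a prototype and validated
    OPERATIONALLY_TESTED = 3      # successfully tested in the real world
    PRODUCTISED_AND_DEPLOYED = 4  # evidence of use in the wild


@dataclass(frozen=True)
class Evidence:
    """Hypothetical evidence flags an analyst might record for one threat vector."""
    prototype_validated: bool = False
    real_world_tested: bool = False
    observed_in_the_wild: bool = False


def classify(evidence: Evidence) -> ThreatCapabilityLevel:
    """Return the highest capability level supported by the recorded evidence."""
    if evidence.observed_in_the_wild:
        return ThreatCapabilityLevel.PRODUCTISED_AND_DEPLOYED
    if evidence.real_world_tested:
        return ThreatCapabilityLevel.OPERATIONALLY_TESTED
    if evidence.prototype_validated:
        return ThreatCapabilityLevel.PROTOTYPE_AND_VALIDATION
    return ThreatCapabilityLevel.IDEA_OR_CONCEPT


print(classify(Evidence()).name)                           # IDEA_OR_CONCEPT
print(classify(Evidence(prototype_validated=True)).name)   # PROTOTYPE_AND_VALIDATION
print(classify(Evidence(observed_in_the_wild=True)).name)  # PRODUCTISED_AND_DEPLOYED
```

Using `IntEnum` keeps the levels comparable, so "at least Operationally Tested" becomes a simple `>=` check.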

The four abstracted AI Threat Capability Levels (TCL) have been mapped to what is known in Defence as Technology Readiness Levels (TRL), a standard used to develop military warfighting capabilities. This extra level of detail is intended primarily for researchers and decision makers who need greater fidelity, especially when trying to understand the AI threat capabilities of State Actors or Serious Organised Crime.

Figure 1 – AI Threat Capability Levels

Through this lens, we aim to provide a clear, actionable framework that distinguishes between speculative dangers and immediate threats, enabling a more informed and effective response to the challenges posed by AI in the realm of cybersecurity.

As noted above, threat actors rarely rely on a single capability; they methodically link a sequence of capabilities to target specific entities. Within cyber threat intelligence, this coordinated series of actions is typically referred to as a "threat campaign", and it is articulated through a series of stages known as the "kill chain." This framework breaks down the step-by-step process adversaries use to conduct cyber espionage or attacks, enabling defenders to anticipate, identify, and build the defence capabilities needed to respond more effectively. This research adopts the MITRE ATLAS (Adversarial Threat Landscape for Artificial-Intelligence Systems) framework, which is modelled on MITRE ATT&CK, as its kill chain reference.

What is the MITRE ATLAS framework?

MITRE ATLAS is a knowledge base of adversary tactics and techniques against AI systems, modelled on the MITRE ATT&CK framework. Its matrix shows how each AI threat vector maps to a kill chain, from early reconnaissance through to the effect or impact on the target, providing a comprehensive way to map the more sophisticated AI security threats.

Here is an overview of each kill chain stage:

Reconnaissance

Adversaries aim to collect information about the AI system they are targeting to plan their future operations. Reconnaissance involves techniques where adversaries actively or passively gather information that supports targeting, such as details of the victim organisation's AI capabilities and research efforts. This information can assist the adversary in other lifecycle phases, like obtaining relevant AI artifacts or tailoring attacks to the victim's specific models.

Resource Development

Adversaries seek to establish resources to support their operations. This includes creating, purchasing, or compromising resources like AI artifacts, infrastructure, accounts, or capabilities. These resources aid in targeting and are pivotal for stages like AI Attack Staging.

Initial Access

The goal here is for the adversary to enter the AI system, which could be network-based, mobile, or an edge device like a sensor platform with local or cloud-enabled AI capabilities. Techniques involve various entry vectors to establish a foothold within the system.

AI Model Access

Adversaries attempt to gain varying levels of access to an AI model, which can range from full knowledge of the model's internals to accessing the physical environment for data collection. Access might be direct, through system breaches, public APIs, or indirectly through product or service interaction. This access facilitates information gathering, attack development, and data input.

Execution

The aim is to run malicious code embedded in AI artifacts or software, resulting in adversary-controlled code executing on systems. Techniques often pair with other tactics for broader goals like network exploration or data theft, utilising remote access tools or scripts for additional discovery.

Persistence

Adversaries work to maintain their presence, using techniques that ensure continued access across restarts or credential changes. This often involves leaving behind modified AI artifacts like poisoned data or backdoored models.

Privilege Escalation

The objective is to obtain higher-level permissions, exploiting system weaknesses, misconfigurations, and vulnerabilities to gain elevated access such as SYSTEM/root level or local administrator privileges. These techniques frequently overlap with persistence methods.

Defence Evasion

Adversaries strive to avoid detection by AI-enabled security software, employing techniques to bypass malware detectors and similar security solutions, often overlapping with persistence strategies to maintain stealth.

Credential Access

This stage involves stealing credentials like account names and passwords through keylogging, credential dumping, or other methods, facilitating system access and making detection more challenging.

Discovery

Adversaries seek to understand the AI environment, using techniques to learn about the system and network post-compromise. This helps in planning subsequent actions based on the environment's characteristics and potential control points.

Collection

The focus is on gathering AI artifacts and information relevant to the adversary's goals, targeting sources like software repositories or model repositories for data that can be stolen or used to stage future operations.

AI Attack Staging

Adversaries prepare their attack on the target AI model, using their knowledge and access to tailor the assault. Techniques include training proxy models, poisoning the target model, and crafting adversarial data, often executed offline to evade detection.

Exfiltration

The goal is to steal AI artifacts or information, employing techniques to transfer data out of the network. This might involve using command and control channels or alternate methods, possibly with size limits to avoid detection.

Impact

Adversaries aim to steal Intellectual Property (IP), manipulate, disrupt, undermine confidence in, or destroy AI systems and data. Techniques can include data destruction or tampering and might be employed to achieve end goals or cover other breaches, potentially altering business processes to benefit the adversary's objectives.
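The stages above can be captured as an ordered list so that the individual steps of an attack can be sorted into kill chain order. The following Python sketch uses the stage names exactly as listed in this article. Treating the stages as a strict linear sequence is a simplifying assumption (real adversaries may skip or revisit stages), and `KillChainStage`, `STAGE_ORDER` and `order_stages` are hypothetical names, not part of any MITRE tooling.

```python
from enum import Enum


class KillChainStage(Enum):
    """Kill chain stages in the order described in this article."""
    RECONNAISSANCE = "Reconnaissance"
    RESOURCE_DEVELOPMENT = "Resource Development"
    INITIAL_ACCESS = "Initial Access"
    AI_MODEL_ACCESS = "AI Model Access"
    EXECUTION = "Execution"
    PERSISTENCE = "Persistence"
    PRIVILEGE_ESCALATION = "Privilege Escalation"
    DEFENCE_EVASION = "Defence Evasion"
    CREDENTIAL_ACCESS = "Credential Access"
    DISCOVERY = "Discovery"
    COLLECTION = "Collection"
    AI_ATTACK_STAGING = "AI Attack Staging"
    EXFILTRATION = "Exfiltration"
    IMPACT = "Impact"


# Enum iteration follows definition order, which gives each stage a position.
STAGE_ORDER = {stage: index for index, stage in enumerate(KillChainStage)}


def order_stages(observed):
    """Sort observed stages into kill chain order, dropping duplicates."""
    return sorted(set(observed), key=STAGE_ORDER.__getitem__)


steps = [KillChainStage.IMPACT, KillChainStage.INITIAL_ACCESS, KillChainStage.AI_MODEL_ACCESS]
print([stage.value for stage in order_stages(steps)])
# ['Initial Access', 'AI Model Access', 'Impact']
```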

We now have a method to evaluate the Threat Capability Level of an AI threat, from idea or concept through to productisation and deployment, and a framework for understanding each stage of the ATLAS kill chain, which is needed to map sophisticated attack chains. Combining the two gives us the AI Security Threat Capability Matrix (see Figure 2). The matrix lets us align each AI cyberattack threat vector with a specific stage of the targeting process aimed at exploiting AI systems, and separate what is theoretically achievable from what a threat actor can practically achieve.
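The matrix itself can be sketched as a grid keyed by (kill chain stage, capability level). The minimal Python class below is an illustration under our own assumptions: `ThreatCapabilityMatrix` and its methods are hypothetical, and the placeholder vectors in the usage example are not real threat assessments.

```python
from collections import defaultdict

# Columns: the four Threat Capability Levels, from lowest to highest maturity.
LEVELS = ("Idea or Concept", "Prototype and Validation",
          "Operationally Tested", "Productised and Deployed")

# Rows: the kill chain stages as listed in this article.
STAGES = ("Reconnaissance", "Resource Development", "Initial Access",
          "AI Model Access", "Execution", "Persistence", "Privilege Escalation",
          "Defence Evasion", "Credential Access", "Discovery", "Collection",
          "AI Attack Staging", "Exfiltration", "Impact")


class ThreatCapabilityMatrix:
    """Places each AI threat vector in a (kill chain stage, capability level) cell."""

    def __init__(self):
        # (stage, level) -> names of the threat vectors in that cell
        self._cells = defaultdict(list)

    def place(self, vector, stage, level):
        """Record a threat vector at a stage and level, rejecting unknown labels."""
        if stage not in STAGES:
            raise ValueError(f"unknown kill chain stage: {stage!r}")
        if level not in LEVELS:
            raise ValueError(f"unknown capability level: {level!r}")
        self._cells[(stage, level)].append(vector)

    def cell(self, stage, level):
        """Return a copy of the vectors in one cell (empty if none)."""
        return list(self._cells.get((stage, level), []))

    def at_or_above(self, level):
        """Return vectors at or above a level, e.g. to separate practical from theoretical."""
        threshold = LEVELS.index(level)
        found = []
        for (_stage, cell_level), vectors in self._cells.items():
            if LEVELS.index(cell_level) >= threshold:
                found.extend(vectors)
        return sorted(found)

    def render(self):
        """Return a text grid: one row per stage, one count column per level."""
        lines = []
        for stage in STAGES:
            counts = [len(self._cells.get((stage, level), [])) for level in LEVELS]
            lines.append(f"{stage:<22}" + "".join(f"{count:>4}" for count in counts))
        return "\n".join(lines)


matrix = ThreatCapabilityMatrix()
# Placeholder vectors and placements, for illustration only.
matrix.place("vector A", "AI Attack Staging", "Idea or Concept")
matrix.place("vector B", "Initial Access", "Productised and Deployed")
matrix.place("vector C", "Exfiltration", "Operationally Tested")
print(matrix.at_or_above("Operationally Tested"))  # ['vector B', 'vector C']
print(matrix.render())
```

Querying `at_or_above("Operationally Tested")` is one way to express the split between what is practically achievable and what remains theoretical; where to draw that line is a judgement for the analyst.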

Figure 2 – AI Security Threat Capability Matrix

How to use the AI Security Threat Capability Matrix?

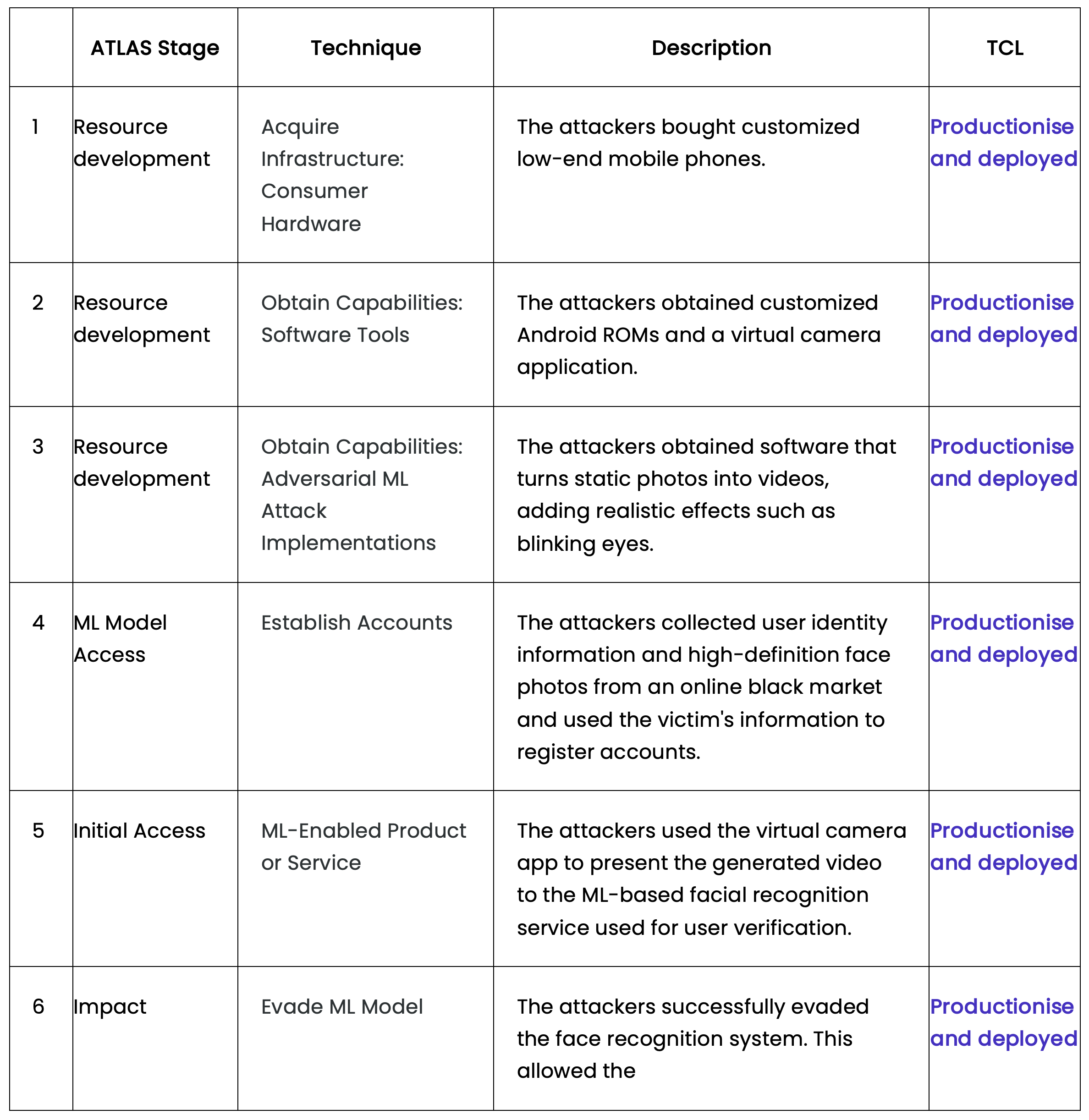

For this example, we use the case study "Camera Hijack Attack on Facial Recognition System", in which an AI security vulnerability was exploited at a cost of $77 million to a government organisation.

Figure 3 – ATLAS kill chain stages

How can this help me?

This example illustrates a successful breach of an AI facial recognition authentication system, which allowed a malicious threat actor to gain entry, manipulate an AI model to facilitate the attack, and abscond with $77 million.
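As a sketch, the case study can be expressed as steps placed in the matrix. The stage assigned to each step below is our own assumed reading of the one-sentence summary above (gain entry, manipulate the model, abscond with the money); Figure 3 shows the case study's actual stage mapping. Every step is placed at level 4 (Productised and Deployed) because the attack was exploited in the wild. `CaseStep`, `attack_path` and `exploited_in_the_wild` are hypothetical names.

```python
from dataclasses import dataclass

# Kill chain order used to sort the steps (stage names as listed in this article).
STAGE_ORDER = ("Reconnaissance", "Resource Development", "Initial Access",
               "AI Model Access", "Execution", "Persistence",
               "Privilege Escalation", "Defence Evasion", "Credential Access",
               "Discovery", "Collection", "AI Attack Staging", "Exfiltration",
               "Impact")


@dataclass(frozen=True)
class CaseStep:
    stage: str        # kill chain stage the step is mapped to (assumed)
    description: str  # what the adversary did at this step
    level: int        # Threat Capability Level, 1 (idea) to 4 (productised and deployed)


# Assumed mapping of the summary above, listed out of order on purpose.
camera_hijack = [
    CaseStep("Impact", "Abscond with $77 million", 4),
    CaseStep("Initial Access", "Gain entry via the facial recognition system", 4),
    CaseStep("AI Model Access", "Manipulate the AI model to facilitate the attack", 4),
]


def attack_path(steps):
    """Return the steps' stages in kill chain order, joined as a readable path."""
    ordered = sorted(steps, key=lambda step: STAGE_ORDER.index(step.stage))
    return " -> ".join(step.stage for step in ordered)


def exploited_in_the_wild(steps):
    """True when every step sits at level 4 (Productised and Deployed)."""
    return all(step.level == 4 for step in steps)


print(attack_path(camera_hijack))
# Initial Access -> AI Model Access -> Impact
print(exploited_in_the_wild(camera_hijack))  # True
```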

In forthcoming articles, I will use this methodology to present additional case studies of cyberattacks against AI systems. Some of these case studies will use the Threat Capability Matrix to show that certain AI security breaches are purely theoretical, or have only been demonstrated in a laboratory environment, with no evidence that these capabilities are being exploited in the wild. My objective is to use the Threat Capability Matrix to expose both the current threat capabilities your organisation must be prepared to address and recover from, and the speculative capabilities that should be incorporated into the development of your AI Secure-by-Design capabilities.

What are the benefits of an AI Security Threat Capability Matrix?

Understanding the Threat Capability Matrix is crucial in cybersecurity for various reasons, particularly considering the complex and evolving nature of AI Security threats today.

Here are the key benefits of an AI Security Threat Capability Matrix:

Inclusivity and Standardisation of Language

· Enhances Communication: Adopting a standardised approach to defining threat capabilities allows for clearer communication among cybersecurity professionals, developers, and AI systems. This shared language ensures that both human analysts and automated systems can consistently understand and interpret threat data.

· Facilitates AI Integration: AI and machine learning models in cybersecurity stand to gain significantly from standardised definitions within the threat capability matrix. Such models can automatically categorise threats within a cybersecurity data fabric, boosting the speed and precision of threat detection and response. Standardised levels within the matrix mean these models can be trained more effectively on uniform data sets, enhancing their capability to spot and classify emerging threats.

· Encourages Collaborative Defence: A scientifically robust approach to the threat capability matrix fosters collaboration across various sectors and industries. This mutual effort allows for the exchange of threat intelligence and defensive strategies, strengthening the collective cybersecurity posture.
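One way to picture this shared language is a threat record that may only use the matrix's standard labels and that serialises to the same JSON whichever team or tool produces it. The following Python sketch assumes a JSON exchange format and a hypothetical `ThreatRecord` class; neither is prescribed by the matrix itself.

```python
import json
from dataclasses import asdict, dataclass

# The shared vocabulary: the only level and stage labels a record may use.
LEVELS = ("Idea or Concept", "Prototype and Validation",
          "Operationally Tested", "Productised and Deployed")
STAGES = ("Reconnaissance", "Resource Development", "Initial Access",
          "AI Model Access", "Execution", "Persistence", "Privilege Escalation",
          "Defence Evasion", "Credential Access", "Discovery", "Collection",
          "AI Attack Staging", "Exfiltration", "Impact")


@dataclass(frozen=True)
class ThreatRecord:
    """One threat entry expressed only in the matrix's standard terms."""
    vector: str
    stage: str
    level: str

    def __post_init__(self):
        # Reject free-text labels so every producer and consumer uses the same terms.
        if self.stage not in STAGES:
            raise ValueError(f"non-standard stage label: {self.stage!r}")
        if self.level not in LEVELS:
            raise ValueError(f"non-standard level label: {self.level!r}")

    def to_json(self):
        """Serialise with sorted keys so identical records produce identical text."""
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text):
        """Parse a record, re-running the vocabulary checks in __post_init__."""
        return cls(**json.loads(text))


record = ThreatRecord("hypothetical vector", "Initial Access", "Operationally Tested")
wire = record.to_json()
print(wire)
# {"level": "Operationally Tested", "stage": "Initial Access", "vector": "hypothetical vector"}
print(ThreatRecord.from_json(wire) == record)  # True
```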

Distinguishing Theoretical vs Actual Capabilities

· Prioritises Actual Threats: Understanding the matrix aids in differentiating between theoretical threats and those that threat actors are currently equipped to execute. This is vital for focusing defences on the most probable and damaging attacks.

· Improves Threat Intelligence: A scientific, threat-focused method of classifying capabilities within the matrix enables organisations to more accurately evaluate the risk posed by different threat actors. Knowing what adversaries are capable of allows organisations to tailor their defences more precisely to the real threat environment.

· Optimises Resource Allocation: Being aware of the present capabilities of threat actors allows organisations to distribute their cybersecurity resources more effectively. By concentrating efforts on immediate threats, organisations can improve their defence against actual, as opposed to hypothetical, attacks.
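To illustrate how the matrix supports prioritisation, the Python sketch below ranks assessed threats by capability level and splits them into threats to defend against now and speculative threats to address through AI Secure-by-Design. The `AssessedThreat` class, the `triage` function, the default threshold of level 4, and the placeholder vectors are all hypothetical choices, not part of the original methodology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AssessedThreat:
    """A threat vector and its assessed Threat Capability Level (1 to 4)."""
    vector: str
    level: int

    def __post_init__(self):
        if not 1 <= self.level <= 4:
            raise ValueError(f"level must be between 1 and 4, got {self.level}")


def triage(threats, act_now_from=4):
    """Split threats into 'defend now' and 'design out later', most mature first.

    `act_now_from` is an assumed policy threshold: by default only threats at
    level 4 (Productised and Deployed) are treated as immediate.
    """
    ranked = sorted(threats, key=lambda t: (-t.level, t.vector))
    act_now = [t.vector for t in ranked if t.level >= act_now_from]
    design_backlog = [t.vector for t in ranked if t.level < act_now_from]
    return act_now, design_backlog


threats = [AssessedThreat("vector A", 1), AssessedThreat("vector B", 4),
           AssessedThreat("vector C", 3), AssessedThreat("vector D", 4)]
now, later = triage(threats)
print(now)    # ['vector B', 'vector D']
print(later)  # ['vector C', 'vector A']
print(triage(threats, act_now_from=3)[0])  # ['vector B', 'vector D', 'vector C']
```

Lowering `act_now_from` to 3 is one way an organisation with a lower risk appetite could bring operationally tested threats into the immediate defence budget.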

Facilitating Scientific, Integrated Defence Strategies

· Bolsters Decision Support Systems: Categorising threats within the matrix helps in developing decision support systems that guide both humans and machines in making informed threat response decisions. This amalgamation of human insight and machine intelligence fosters a cybersecurity stance that is both resilient and agile.

· Guides Defence Capability Development: A clear understanding of the matrix empowers cybersecurity teams to create more focused and potent defence mechanisms. Preparedness for potential threat escalations ensures that defences are proactive, not just reactive.

· Encourages a Proactive Security Approach: A scientific approach to the threat capability matrix prompts a shift from reactive to proactive security measures. By anticipating how threat actor capabilities will progress, organisations can ready their defences in advance, reducing the likelihood of successful attacks.
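Anticipating how capabilities progress implies comparing assessments over time. A minimal Python sketch, assuming each assessment is a simple mapping from threat vector to capability level, could flag every vector whose level has risen. The `escalations` function and the snapshot data are hypothetical.

```python
def escalations(previous, current):
    """Return vectors whose Threat Capability Level rose between two assessments.

    Both arguments map a threat vector name to its level (1 to 4). A vector that
    appears only in `current` is treated as rising from 0 (not previously seen).
    Results are returned as {vector: (old_level, new_level)}, sorted by name.
    """
    changes = {}
    for vector, level in current.items():
        before = previous.get(vector, 0)
        if level > before:
            changes[vector] = (before, level)
    return dict(sorted(changes.items()))


# Two hypothetical assessment snapshots of placeholder vectors.
earlier = {"vector A": 1, "vector B": 2, "vector C": 4}
latest = {"vector A": 2, "vector B": 2, "vector C": 4, "vector D": 1}
print(escalations(earlier, latest))
# {'vector A': (1, 2), 'vector D': (0, 1)}
```

A vector moving from Prototype and Validation to Operationally Tested, for example, would be a signal to prepare defences before it reaches deployment in the wild.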

In summary, understanding the AI Security Threat Capability Matrix is key to constructing a more inclusive, scientifically informed, threat-centric and action-oriented cybersecurity defence framework. It narrows the gap between theoretical risks and the actual threat landscape, allowing for the development of more efficient, integrated defence strategies that capitalise on both human expertise and AI technology. This proactive stance not only enhances defences against current threats but also lays the groundwork for mitigating future AI Security risks.